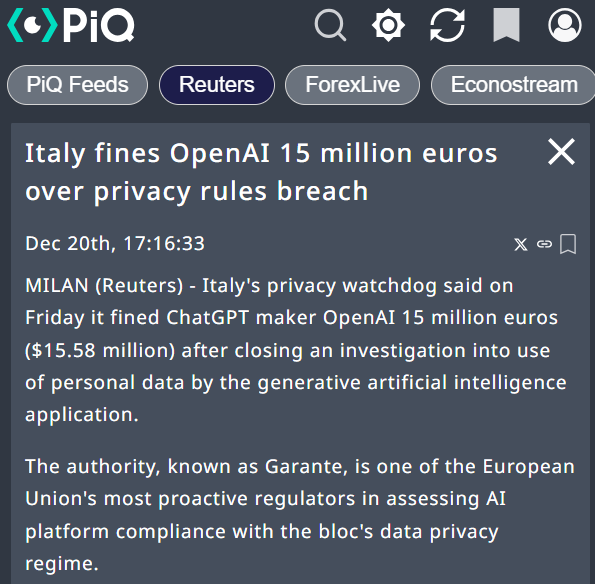

In a landmark ruling that is sending shockwaves across the global tech landscape, Italy’s Data Protection Authority (IDPA) has fined OpenAI a staggering 15 million euros ($15.7 million) over breaches of data protection and privacy laws. This fine is the result of an extensive investigation into OpenAI’s flagship product, ChatGPT, and highlights growing concerns about how artificial intelligence (AI) companies handle personal data. The investigation, which began in March 2023, uncovered serious lapses in transparency, data collection practices, and safeguards against underage usage of the AI service.

The Breach: OpenAI’s Missteps in Data Protection

The IDPA’s findings paint a troubling picture of OpenAI’s handling of user data. One of the primary reasons for the fine was OpenAI’s failure to notify the Italian data protection agency about a significant data breach that occurred in March 2023. Under the European Union’s General Data Protection Regulation (GDPR), companies must notify the relevant supervisory authority of a personal data breach without undue delay and, where feasible, within 72 hours of becoming aware of it. OpenAI’s failure to do so has drawn sharp criticism and put its practices under intense scrutiny.

Further complicating matters, the IDPA found that OpenAI had been using personal data to train ChatGPT without an adequate legal basis for doing so. According to the watchdog, OpenAI also violated the fundamental principle of transparency by failing to inform users about how their personal data was being used to train its generative AI model. This lack of clear communication with users has raised alarms about data privacy in the rapidly advancing AI field.

Lack of Age Verification: Exposing Minors to Unfit Content

Another major issue uncovered during the investigation was OpenAI’s failure to implement adequate age verification mechanisms to prevent underage users from accessing its services. The IDPA specifically pointed out that OpenAI did not have systems in place to ensure that users under the age of 13 were not exposed to potentially harmful or inappropriate content. Given that ChatGPT interacts with users in a conversational manner, the risk of exposing minors to inappropriate responses is a serious concern.

This oversight could have far-reaching implications. Many minors lack the cognitive maturity to use the AI responsibly and may unknowingly be drawn into conversations that are unsuitable for their developmental stage. The IDPA has strongly emphasized the need for OpenAI to address these vulnerabilities, warning that such risks could undermine the safety of young users.

A Public Awareness Campaign: Ripple Effects Across the Industry

As part of the corrective measures, the IDPA has mandated that OpenAI conduct a six-month public awareness campaign. This campaign, which will span multiple platforms including radio, television, newspapers, and the internet, aims to raise public awareness about how ChatGPT functions, the data collection processes behind it, and the rights users have under the European Union’s General Data Protection Regulation (GDPR).

The awareness campaign will also focus on educating users about how they can exercise their rights, including the rights to object to the use of their data for generative AI training and to have that data rectified or erased. The IDPA’s decision to enforce such a campaign signals the increasing importance of transparency in AI development and the need for companies like OpenAI to foster a deeper understanding of data usage and privacy issues among their users.

By the end of the campaign, users should understand how their data is used, how to object to its use for training purposes, and how to exercise their other rights under the GDPR.

The IDPA’s decision to fine OpenAI is a powerful reminder of the strict penalties companies can face for violating the GDPR. Under the regulation, the most serious violations can draw fines of up to 20 million euros or 4% of a company’s total worldwide annual turnover for the preceding financial year, whichever is higher. While the fine imposed on OpenAI is significant, it could have been much larger had the company not demonstrated a “collaborative attitude” during the investigation, which reportedly helped to reduce the penalty.
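The “whichever is higher” rule comes down to simple arithmetic. The following is a minimal sketch of that calculation, not a legal tool: the function name `gdpr_max_fine` is made up for illustration, and the turnover figures are hypothetical examples, not OpenAI’s actual revenue.

```python
# Upper limit for the most serious GDPR violations:
# EUR 20 million or 4% of worldwide annual turnover, whichever is higher.
FIXED_CAP_EUR = 20_000_000
TURNOVER_PERCENT = 4


def gdpr_max_fine(annual_turnover_eur: int) -> int:
    """Return the maximum possible fine in whole euros (illustrative helper)."""
    turnover_based_cap = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


# Hypothetical turnover figures, chosen only to show both branches of the rule.
for turnover in (100_000_000, 500_000_000, 1_000_000_000):
    print(f"turnover EUR {turnover:,} -> cap EUR {gdpr_max_fine(turnover):,}")
# turnover EUR 100,000,000 -> cap EUR 20,000,000
# turnover EUR 500,000,000 -> cap EUR 20,000,000
# turnover EUR 1,000,000,000 -> cap EUR 40,000,000
```

In this sketch the fixed 20 million euro ceiling applies until annual turnover passes 500 million euros; beyond that point the 4% figure sets the limit.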
